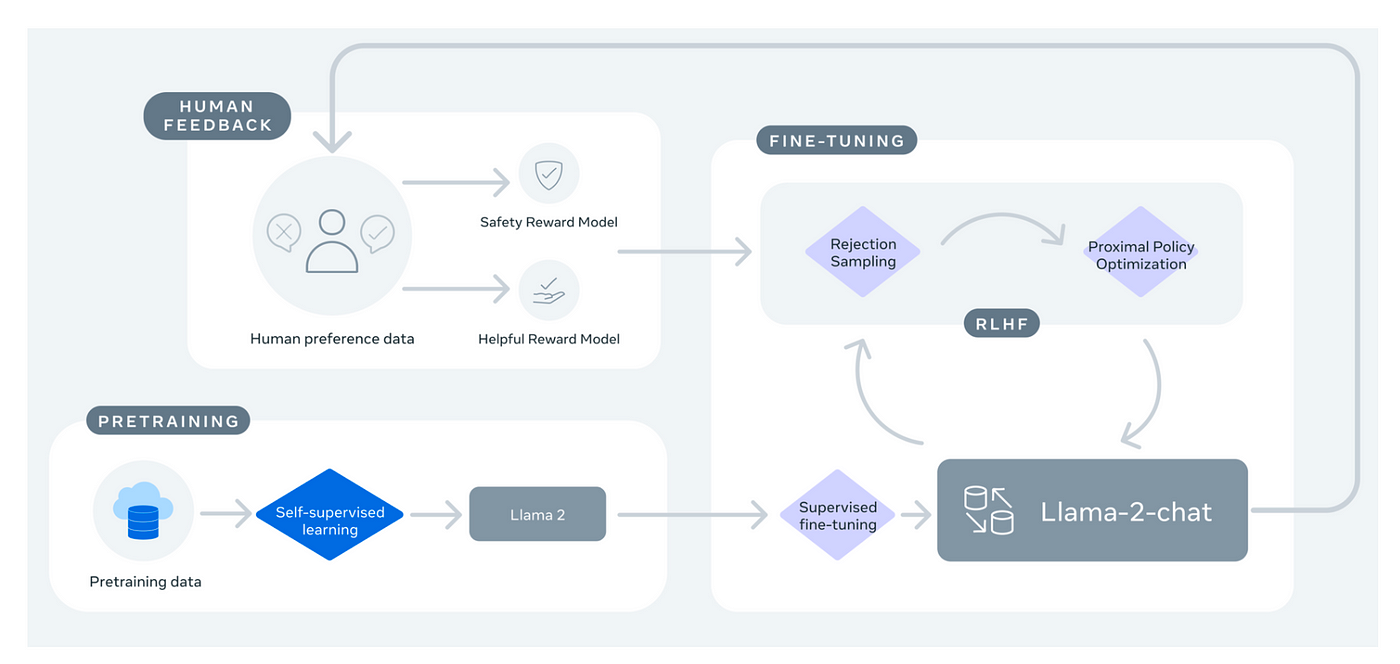

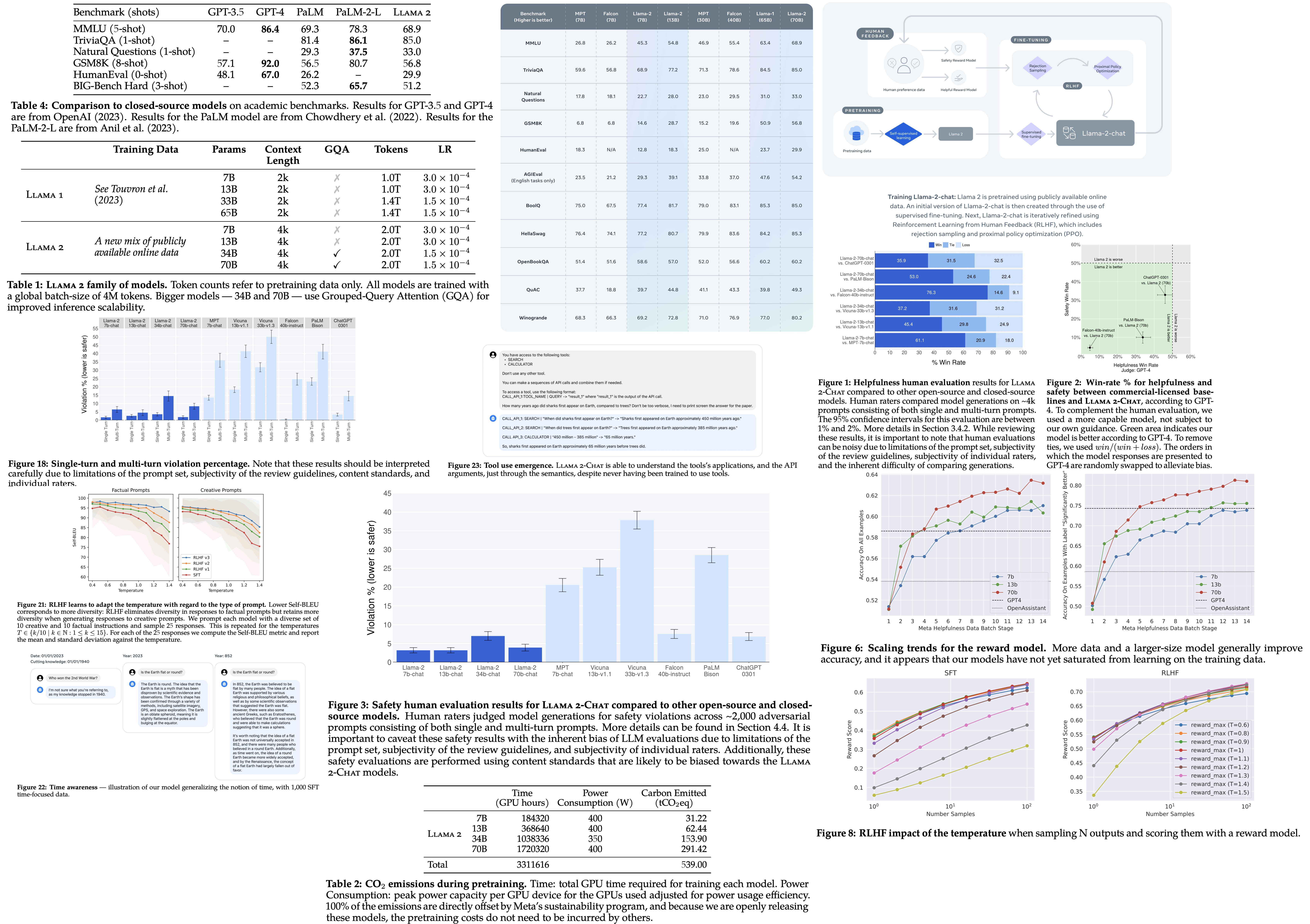

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. Llama 2 was pretrained on publicly available online data sources. The fine-tuned model, Llama Chat, leverages publicly available instruction datasets and over 1 million human annotations. Meta developed and publicly released the Llama 2 family of large language models (LLMs), a collection of pretrained and fine-tuned generative text models ranging in... Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2.

Llama 2 Chat: Llama 2 Is the Result of the Expanded... | by Varun Mathur | AI monks.io | Medium

For an example of how to integrate LlamaIndex with Llama 2, see here. We also published a completed demo app showing how to use LlamaIndex to... This manual offers guidance and tools to assist in setting up Llama, covering access to the model, hosting... Usage tips: the Llama 2 models were trained using bfloat16, but the original inference uses float16. The checkpoints uploaded on the Hub use `torch_dtype`... Our latest version of Llama, Llama 2, is now accessible to individuals, creators, researchers, and businesses so they can experiment, innovate, and scale their... Llama 2 Chat models are fine-tuned on over 1 million human annotations and are made for chat. Open the terminal and run `ollama run llama2`.
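The bfloat16 versus float16 usage tip above is easier to follow with numbers. The sketch below is not taken from any Llama 2 code: it uses only Python's standard `struct` module, and the helper names `to_bfloat16` and `to_float16` are made up for illustration. bfloat16 is simulated by truncating the float32 encoding to its top 16 bits, whereas real hardware usually rounds, so treat the exact values as approximate.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Simulate bfloat16 by keeping the top 16 bits of the float32 encoding.

    Assumption: truncation instead of round-to-nearest, for simplicity.
    """
    top_two_bytes = struct.pack(">f", x)[:2]
    return struct.unpack(">f", top_two_bytes + b"\x00\x00")[0]


def to_float16(x: float) -> float:
    """Round-trip a value through IEEE half precision (struct format 'e').

    Raises OverflowError when x is outside the float16 range.
    """
    return struct.unpack(">e", struct.pack(">e", x))[0]


if __name__ == "__main__":
    # float16 keeps more mantissa bits, so small values are stored more precisely.
    print("0.1 as float16 :", to_float16(0.1))
    print("0.1 as bfloat16:", to_bfloat16(0.1))

    # bfloat16 keeps the float32 exponent, so large values still fit.
    print("70000 as bfloat16:", to_bfloat16(70000.0))
    try:
        to_float16(70000.0)
    except OverflowError as exc:
        print("70000 as float16 : overflow:", exc)
```

The trade-off shown here is range versus precision: bfloat16 covers the same exponent range as float32 with fewer mantissa bits, while float16 is more precise near 1 but cannot represent values above 65504.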

Uses GGML_TYPE_Q4_K for the `attention.vw` and `feed_forward.w2` tensors, GGML_TYPE_Q2_K for the... Chat with Llama 2. Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets. One-liner to run Llama 2 locally using llama.cpp: it will then ask you to provide information about the Llama 2 model you want to run. Please enter the Repository ID (default... Execute the following command to launch the model; remember to replace `quantization` with your chosen quantization method from the options... Llama2 7B Chat Uncensored. Description: this repo contains GGML format model files for George Sung's Llama2 7B Chat Uncensored.

Paper Review: Llama 2: Open Foundation and Fine-Tuned Chat Models | Andrey Lukyanenko

If it didn't provide any speed increase, I would still be OK with this. I have a 24 GB 3090, and 24 GB VRAM + 32 GB RAM = 56 GB. Also wanted to know the minimum CPU... LLaMA-65B and 70B perform optimally when paired with a GPU that has a minimum of 40 GB VRAM. Suitable examples of GPUs for this... All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and have double the context length of Llama 1. Using llama.cpp with llama-2-13b-chat.ggmlv3.q4_0.bin, llama-2-13b-chat.ggmlv3.q8_0.bin, and llama-2-70b-chat.ggmlv3.q4_0.bin from TheBloke. If the 7B Llama-2-13B-German-Assistant-v4-GPTQ model is what you're after, you gotta think about hardware in two ways.
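The hardware figures above can be sanity-checked with a back-of-the-envelope estimate of weight memory. The sketch below makes several assumptions that do not come from the text: parameter counts are the nominal 7, 13, and 70 billion; q4_0 and q8_0 are taken as 4.5 and 8.5 bits per weight (blocks of 32 weights plus one 16-bit scale in GGML); and only the weights are counted, so the KV cache, activations, and runtime overhead come on top. The function names are illustrative only.

```python
# Assumed effective bits per weight for each storage format.
BITS_PER_WEIGHT = {
    "float16": 16.0,
    "q8_0": 8.5,  # 32 x 8-bit weights + one 16-bit scale per block
    "q4_0": 4.5,  # 32 x 4-bit weights + one 16-bit scale per block
}

# Nominal parameter counts; the real checkpoints differ slightly.
NOMINAL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_gb(model: str, fmt: str) -> float:
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return NOMINAL_PARAMS[model] * BITS_PER_WEIGHT[fmt] / 8 / 1e9


def fits(model: str, fmt: str, budget_gb: float) -> bool:
    """Return True if the weights alone fit in the given memory budget."""
    return weight_gb(model, fmt) <= budget_gb


if __name__ == "__main__":
    for model in NOMINAL_PARAMS:
        for fmt in BITS_PER_WEIGHT:
            size = weight_gb(model, fmt)
            verdict = "fits" if fits(model, fmt, 24.0) else "does not fit"
            print(f"{model:>3} {fmt:<7} ~{size:6.1f} GB, {verdict} in 24 GB")
```

Under these assumptions, 70B at q4_0 needs roughly 39.4 GB for weights alone, which is consistent with the 40 GB VRAM figure quoted above and explains why a single 24 GB card has to offload part of the model to system RAM.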
